Why go multi-threaded / multi-core?

Having gotten some ideas of the mechanics behind the Source engine's transition to multi-core, we were keen to find out what had really motivated this move. Yes, there's new hardware arriving in the form of Kentsfield, but other development houses are not moving as quickly as Valve is when it comes to embracing the technology.

Gabe Newell has a fairly easy answer. "If we're right, other people are going to take a long time to get the multi-threaded versions of their engines out. If we're right with the approach we've taken - which is to iterate and build on top of Source - we can get there a couple of years ahead of where they could be." In other words, Source can be at the cutting edge of engine technology, which makes it very attractive both to consumers and licensees.

"It makes performance more of a software problem in this generation of gaming than a hardware problem. That's not really where we'd like it to be, but that's the reality. But with this investment we're making into the Source engine, we really think that there are games that we can build that other companies that don't make this investment won't be able to build."

The future of multi-core

In the near future, multi-core is about moving from dual-core processors to quad-core processors. Valve is clearly excited about the new Intel chip. Gabe Newell gives us details on his system buying policy - "We've been holding off buying any new systems here at work. Everything we're buying now, anything that needs replacing in the future - we're replacing it with Kentsfield."

How about AMD's 4x4 platform, which uses two FX processors for effective quad core - and its native quad-core part coming out next year? AMD has made a lot of noise about how its native quad-core part is going to be so much faster than Kentsfield, which uses a dual-core, dual-die design. Is that noise really warranted? Tom tells us: "Depending on how you choose to thread things, you could bring out performance differences across different quad-core architectures. But we're really trying to avoid that by working out the correct levels of granularity." Chris Green adds: "We've tried to avoid worst case performance in places like that. To be honest, we're more concerned with main memory bandwidth than the type of core interconnect." So, whilst there could be theoretical differences in performance between native and non-native quad-core, don't expect to see massive differences in practice.

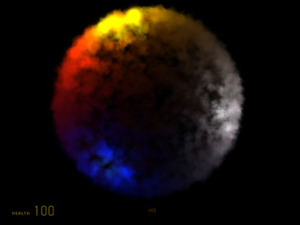

But the chance of physics cards taking off is pretty minimal if we draw inferences from Newell's views on hardware. He talks enthusiastically of the "Post-GPU world" - rather like the one envisaged by AMD and ATI with their Fusion project. In this world, we see a number of homogeneous CPU cores, each assigned a different task - including graphics rendering. This allows for more flexibility when it comes to splitting up workloads, and means that engine functions such as AI and physics can become a more integral part of the gaming experience because of the scalability such an architecture adds. "All of a sudden," raves Gabe, "if your AI isn't running fast enough, you can lower your graphics resolution. That's some awesome flexibility."
